RICE Framework

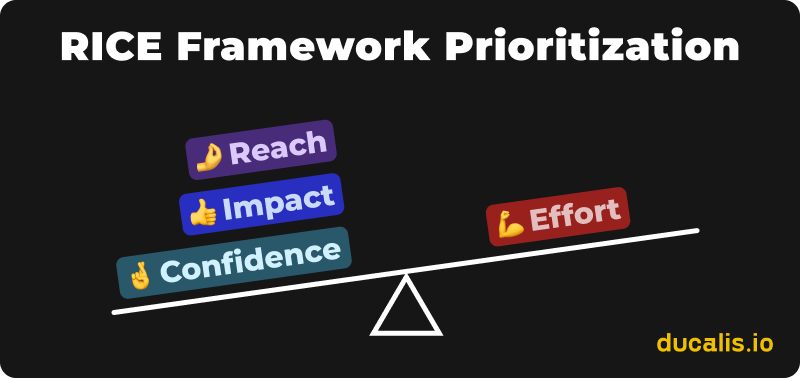

The RICE Framework is a scoring model that helps you prioritize projects, features, and hypotheses by evaluating four factors: Reach, Impact, Confidence, and Effort.

What Is the RICE Score

The RICE Framework scores projects on four criteria:

- Reach - How many people will this affect within a given period?

- Impact - How much will this affect your goal when customers encounter it?

- Confidence - How confident are you in your Reach and Impact estimates?

- Effort - How much of your team's time will this require?

The framework helps you identify the most valuable solutions to work on.

Calculate the RICE Score

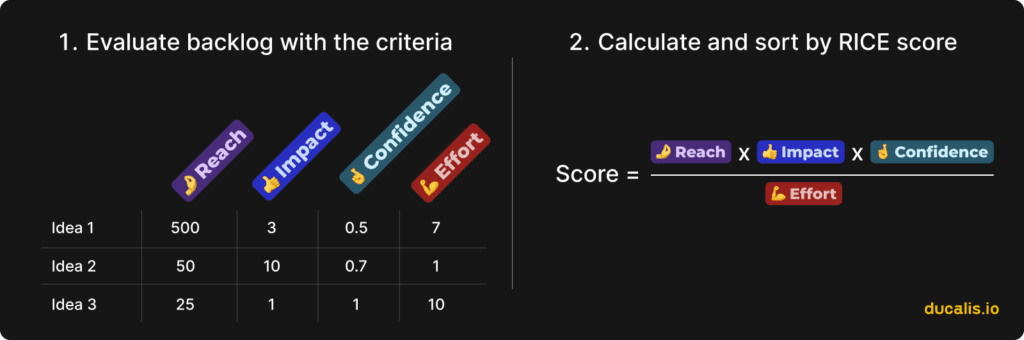

To calculate the RICE score:

- Multiply Reach × Impact × Confidence

- Divide the result by Effort

Sort your projects by total score in descending order. A higher score means more value per unit of time invested. This helps you focus on high-impact work while keeping in mind whom it will affect, why, how, and when.
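The calculation and ranking steps above fit in a few lines of Python. This is a minimal illustration, not Ducalis's implementation. The `Project` class, `rice_score`, `rank`, and the sample projects are all hypothetical. Confidence is entered as a fraction, so 80% becomes 0.8.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    reach: float       # people or events per period
    impact: float      # 0.25, 0.5, 1, 2 or 3
    confidence: float  # fraction: 0.8 means 80%
    effort: float      # person-months, must be > 0


def rice_score(p: Project) -> float:
    """(Reach x Impact x Confidence) / Effort."""
    if p.effort <= 0:
        raise ValueError(f"{p.name}: effort must be positive")
    return p.reach * p.impact * p.confidence / p.effort


def rank(projects: list[Project]) -> list[tuple[str, float]]:
    """Return (name, score) pairs sorted from highest to lowest score."""
    scored = [(p.name, rice_score(p)) for p in projects]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical backlog
backlog = [
    Project("Signup page redesign", reach=2000, impact=1, confidence=0.8, effort=2),
    Project("Power-user shortcuts", reach=150, impact=2, confidence=0.5, effort=1),
    Project("Onboarding checklist", reach=800, impact=2, confidence=0.8, effort=4),
]

for name, score in rank(backlog):
    print(f"{score:8.1f}  {name}")
```

With these made-up numbers, the order is:

- Signup page redesign: 2000 × 1 × 0.8 / 2 = 800
- Onboarding checklist: 320
- Power-user shortcuts: 150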

Reach

How many people will this feature affect within a given period?

Estimate the number of people or events per period, using any positive number.

Reach measures how many potential customers and users your idea will affect. A signup page affects every potential customer, while an advanced feature affects only experienced users.

Impact

How much will this feature affect your goal when a customer encounters it?

Scoring:

- 0.25 = Minimal

- 0.5 = Low

- 1 = Medium

- 2 = High

- 3 = Massive

Impact measures the effect on one specific goal, so focus on a single goal when scoring. Comparing "increases conversion by 2" with "increases adoption by 3" and "maximizes satisfaction by 2" produces meaningless comparisons.

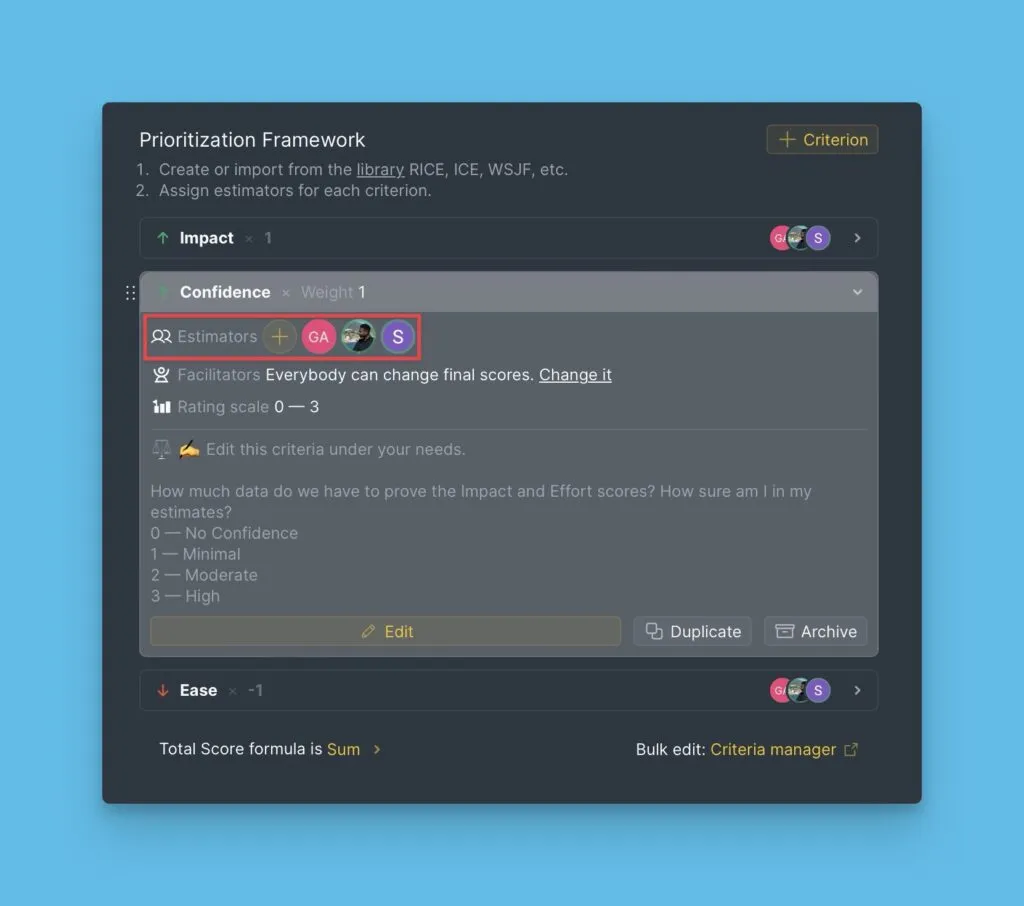

Confidence

How confident are you in your Reach and Impact estimates?

Scoring:

- 20% = Moonshot

- 50% = Low Confidence

- 80% = Medium Confidence

- 100% = High Confidence

Confidence reflects how strong your data is. Give 100% only when you have solid supporting data. This criterion makes prioritization more data-driven and less emotional.
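Impact and Confidence accept only the fixed levels listed above, so a scoring sheet can reject any other value at entry time. The sketch below assumes those levels. The label spellings, dictionaries, and function names are hypothetical. The sketch also shows how Confidence discounts a score: the same estimate at 50% confidence scores half of what it scores at 100%.

```python
IMPACT_LEVELS = {
    "minimal": 0.25,
    "low": 0.5,
    "medium": 1.0,
    "high": 2.0,
    "massive": 3.0,
}

CONFIDENCE_LEVELS = {
    "moonshot": 0.20,
    "low": 0.50,
    "medium": 0.80,
    "high": 1.00,
}


def impact_value(level: str) -> float:
    """Translate an Impact label into its multiplier, rejecting unknown labels."""
    try:
        return IMPACT_LEVELS[level.lower()]
    except KeyError:
        raise ValueError(f"unknown impact level: {level!r}") from None


def confidence_value(level: str) -> float:
    """Translate a Confidence label into a fraction, rejecting unknown labels."""
    try:
        return CONFIDENCE_LEVELS[level.lower()]
    except KeyError:
        raise ValueError(f"unknown confidence level: {level!r}") from None


def rice(reach: float, impact: str, confidence: str, effort: float) -> float:
    return reach * impact_value(impact) * confidence_value(confidence) / effort


# Same Reach, Impact and Effort; only Confidence changes.
sure = rice(1000, "high", "high", 2)    # 1000 * 2 * 1.0 / 2
unsure = rice(1000, "high", "low", 2)   # 1000 * 2 * 0.5 / 2
```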

Effort

How much time will this feature require from the whole team: product, design, and engineering?

Estimate it as any positive number of "person-months".

Effort measures the time needed to implement the work. It is the cost side of the Value/Effort balance and helps reveal Quick Wins.


8 tips to improve the RICE Framework

These suggestions are simple and practical. You can apply a few of them or all of them. Applying all of them gives the most benefit.

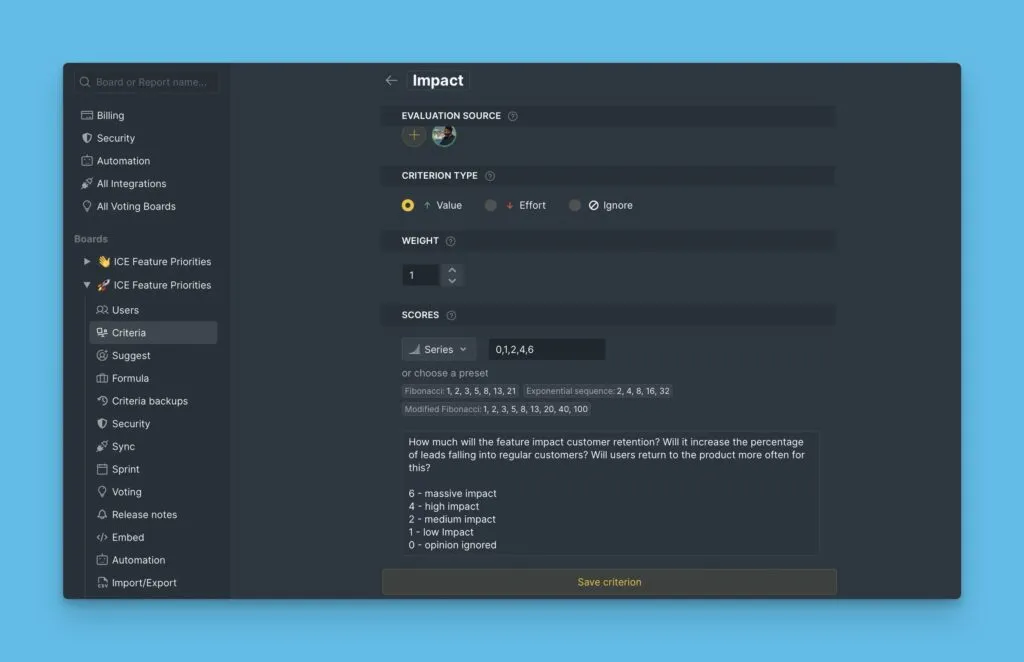

1. Specify what each criterion means for your product

The RICE criterion descriptions are deliberately generic. Impact asks "How much will this affect the goal?" but doesn't say which goal. Without customization, you have to keep recalling your goal, which slows estimation down. Your attention drifts, and you end up giving high or low Impact scores to tasks that affect different goals.

If you have formulated OKRs or business metrics, add them to the description. For a focus on customer retention, the description could read:

How much will this feature affect customer retention? Will it increase the percentage of prospects who become regular customers? Will users return to the product more often?

You can even rename Impact to "Retention". Nobody will judge you.

The same applies to Effort. "Person-months" suits large projects, but fast-growing teams benefit from "person-days", "person-weeks", or "person-hours".
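Changing the Effort unit is safe as long as every project uses the same unit. Converting person-days to person-months divides every score by the same constant, so the order stays the same. Here is a quick check in Python with made-up projects. It assumes 20 working days per person-month, which is a hypothetical figure.

```python
WORKDAYS_PER_MONTH = 20  # assumption: adjust to your team's calendar


def rice(reach, impact, confidence, effort):
    return reach * impact * confidence / effort


# Hypothetical projects: (name, reach, impact, confidence, effort in person-days)
projects = [
    ("A", 500, 2, 0.8, 10),
    ("B", 1200, 1, 0.5, 30),
    ("C", 300, 3, 1.0, 5),
]


def ranking(effort_scale: float) -> list[str]:
    """Rank projects after multiplying every Effort value by effort_scale."""
    scored = [(name, rice(r, i, c, e * effort_scale)) for name, r, i, c, e in projects]
    return [name for name, _ in sorted(scored, key=lambda x: x[1], reverse=True)]


in_days = ranking(1.0)                       # Effort in person-days
in_months = ranking(1 / WORKDAYS_PER_MONTH)  # same Effort in person-months
assert in_days == in_months  # same order; only the absolute scores differ
```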

Templates:

Customizable RICE template for feature prioritization

Customizable RICE template for marketing prioritization

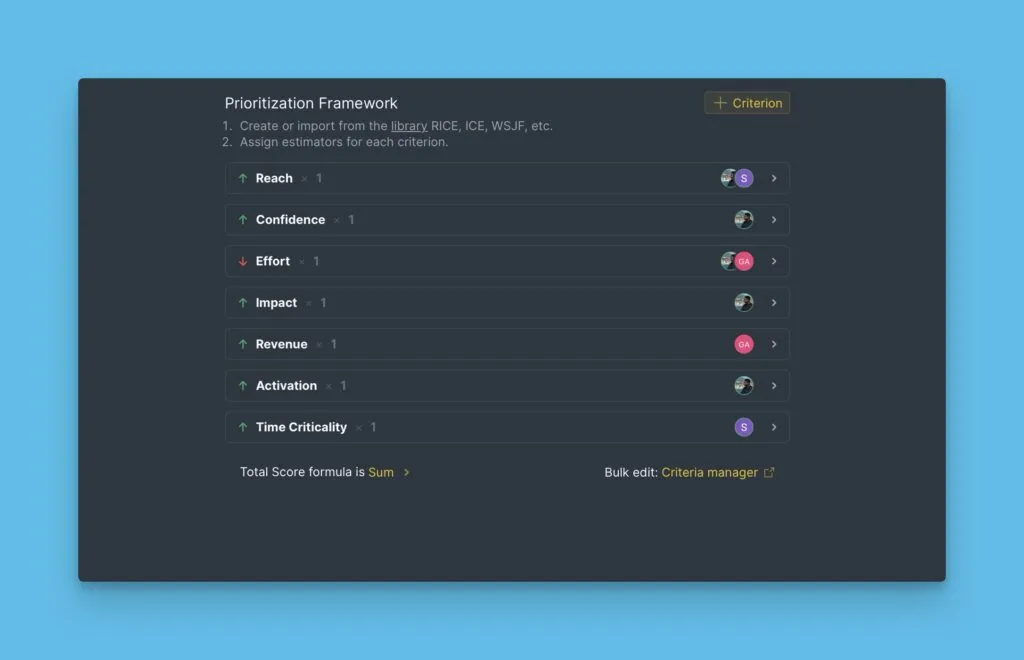

2. Add custom factors for your goals

You probably have more than one goal, not just a single metric to grow. Impact alone can't capture every dimension of the business.

For a comprehensive assessment, add other values:

- Business metrics - Revenue, Customer Acquisition Cost

- Product metrics - Activation, Retention

- Negative metrics - Risks, Promotion Costs

Frameworks provide an excellent starting point. Customization doesn't break prioritization; it makes prioritization work better.
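Here is one possible way to fold extra factors into a single score. It rests on several assumptions:

- All factors use the same scale (see tip 3).
- Positive factors such as Revenue, Activation, and Retention are summed to give the value.
- Negative factors (Effort plus Risks and Promotion Costs) are summed to give the cost.

The factor names, the 1-to-8 scores, and this sum-then-divide formula are illustrative. They are not the canonical RICE formula or any specific tool's formula.

```python
POSITIVE = ("reach", "revenue", "activation", "retention")
NEGATIVE = ("effort", "risk", "promotion_cost")


def extended_score(scores: dict[str, float], confidence: float) -> float:
    """(sum of positive factors) x confidence / (sum of negative factors)."""
    missing = [f for f in POSITIVE + NEGATIVE if f not in scores]
    if missing:
        raise ValueError(f"missing factor scores: {missing}")
    value = sum(scores[f] for f in POSITIVE)
    cost = sum(scores[f] for f in NEGATIVE)
    if cost <= 0:
        raise ValueError("negative factors must sum to a positive number")
    return value * confidence / cost


# Hypothetical project scored on a shared 1-8 scale
referral_program = {
    "reach": 5, "revenue": 8, "activation": 2, "retention": 3,
    "effort": 5, "risk": 2, "promotion_cost": 3,
}
print(extended_score(referral_program, confidence=0.8))
```

In this example the value is 18 and the cost is 10. At 80% confidence the score is 18 × 0.8 / 10 = 1.44.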

3. Unify score scales to prioritize faster

The RICE criteria use inconsistent scales:

- Reach - Actual user metrics

- Impact - Subjective numbers

- Confidence - Percentages

- Effort - Development days

This variety slows estimation down and creates inconsistency.

Collecting data for Reach takes time, and the numbers stay approximate anyway. Prioritization should help you make decisions quickly, not slow them down. Perfect accuracy doesn't guarantee perfect priorities.

Use the same scale for all criteria. Popular sequences include:

- 0-3 scale

- 0-10 scale

- Fibonacci - 1, 2, 3, 5, 8

- Exponential - 1, 2, 4, 8, 16

Pick one sequence and stick with it. Scoring every criterion with the same numbers again and again, and then seeing the results, builds a reliable intuition for estimation.
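Sticking to one sequence is easier if raw gut estimates are snapped to the nearest allowed step. The sketch below does this. The `SCALES` table and the `snap` helper are hypothetical. When an estimate is equally close to two steps, it goes to the lower one; that tie rule is an arbitrary choice.

```python
SCALES = {
    "0-3": [0, 1, 2, 3],
    "0-10": list(range(11)),
    "fibonacci": [1, 2, 3, 5, 8],
}


def snap(estimate: float, scale: list[float]) -> float:
    """Return the step of `scale` closest to a raw estimate; ties go to the lower step."""
    return min(scale, key=lambda step: (abs(step - estimate), step))


# Example: a teammate's raw guesses forced onto the Fibonacci sequence
raw_guesses = [4, 6, 7, 12]
snapped = [snap(x, SCALES["fibonacci"]) for x in raw_guesses]
print(snapped)
```

Here `snapped` is `[3, 5, 8, 8]`. The 4 is equally close to 3 and 5, so the tie rule picks 3.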

To build reliability faster and reduce subjectivity, add score meanings to the criterion descriptions:

Reach - How many people will this feature affect within a given period?

- 1 = Fewer than 100 people

- 2 = 100-300 people

- 3 = 300-600 people

- 5 = 600-900 people

- 8 = ~1,000+ people
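The Reach score meanings above can be applied mechanically with a sorted list of bucket boundaries. This sketch makes two assumptions. First, the boundaries are lower-inclusive, so exactly 300 people scores 3. Second, everything from 900 up falls into the "~1,000+" bucket, because the descriptions leave 900-1,000 unassigned. `reach_score` is a hypothetical helper.

```python
from bisect import bisect_right

# Lower bounds of buckets 2, 3, 5 and 8, taken from the Reach meanings above.
# Assumption: boundaries are lower-inclusive; 900 and up counts as "~1,000+".
THRESHOLDS = [100, 300, 600, 900]
SCORES = [1, 2, 3, 5, 8]


def reach_score(people: int) -> int:
    """Map a raw reach estimate to the 1/2/3/5/8 scale."""
    if people < 0:
        raise ValueError("reach cannot be negative")
    # bisect_right counts how many thresholds are <= people
    return SCORES[bisect_right(THRESHOLDS, people)]
```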

4. Gather diverse opinions for expert evaluation

Estimating alone has drawbacks.

First, you miss expert input. Engineers are best at estimating development time. PMs are better at estimating user impact. Stakeholders understand business impact.

Second, asking teammates to estimate the criteria makes them more aware of what their tasks are for. Understanding user and business needs informs everyday decisions and builds team clarity and shared experience.

Divide the criteria among team members according to their expertise. Evaluate some criteria together as a team.
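The sketch below shows one way to split criteria by expertise. Each criterion gets a set of owning roles, and the team's estimate is the mean of the owners' scores on a shared 1-8 scale. Votes from non-owners are ignored. The role names, the assignments, and averaging as the aggregation rule are hypothetical choices, not a prescribed process.

```python
from statistics import mean

# Hypothetical assignment: which roles estimate which criterion
OWNERS = {
    "reach": {"pm", "marketing"},
    "impact": {"pm", "stakeholder"},
    "confidence": {"pm", "engineer", "stakeholder"},  # evaluated together
    "effort": {"engineer"},
}


def team_estimate(votes: list[tuple[str, str, float]]) -> dict[str, float]:
    """Average only the votes cast by each criterion's owners.

    votes: (role, criterion, score) triples; votes from non-owners are ignored.
    """
    by_criterion: dict[str, list[float]] = {c: [] for c in OWNERS}
    for role, criterion, score in votes:
        if role in OWNERS.get(criterion, set()):
            by_criterion[criterion].append(score)
    return {c: mean(s) for c, s in by_criterion.items() if s}


votes = [
    ("pm", "reach", 5), ("marketing", "reach", 3),
    ("pm", "impact", 3), ("stakeholder", "impact", 2),
    ("engineer", "effort", 8), ("pm", "effort", 2),  # the PM's Effort vote is ignored
    ("pm", "confidence", 5), ("engineer", "confidence", 3), ("stakeholder", "confidence", 4),
]
print(team_estimate(votes))
```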

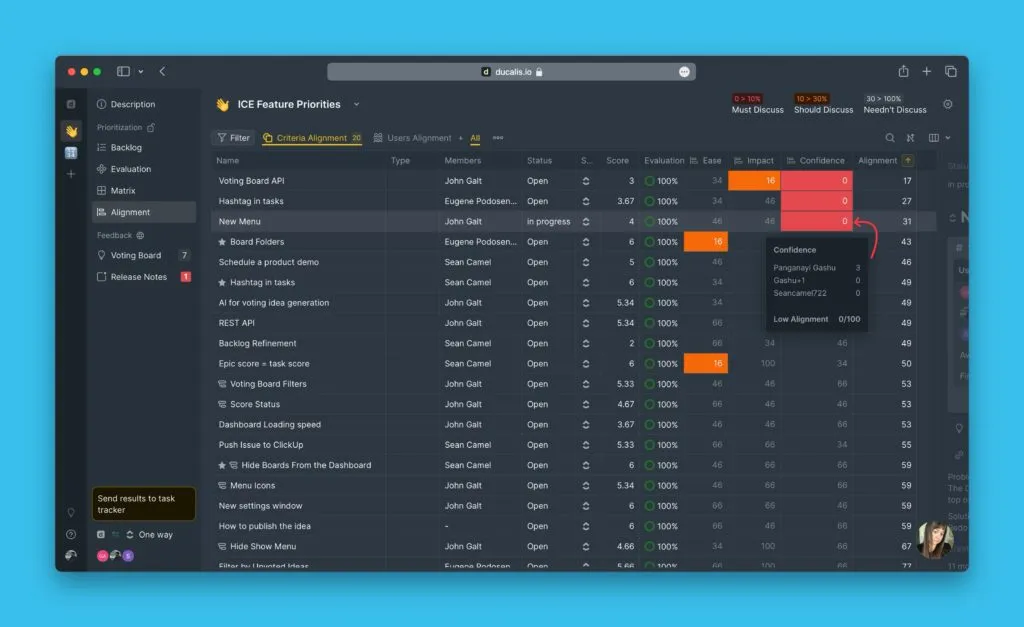

5. Check score dispersion for estimation clarity

After collaborative scoring, compare your teammates' scores. You may discover that someone:

- Has a unique perspective that other team members didn't consider

- Doesn't understand the project or the goal behind the criterion

Sometimes all the scores differ, which shows that the team doesn't understand the project or the criteria. This exercise exposes gaps in how well the team is aligned around its goals. You need a shared understanding: if you're building a rocket, everyone should be picturing a rocket, not a flying saucer.

To spot problems, discuss only the projects or criteria with scattered scores. There's no need to go through the entire backlog together, which saves time on coordination.

Ducalis highlights tasks and criteria with a wide spread of scores for discussion.
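Outside a dedicated tool, you can approximate the same check. Compute the spread of each criterion's scores and flag any spread above a threshold. This sketch uses the population standard deviation (`statistics.pstdev`). The sample scores and the 1.5 threshold are hypothetical. A simple max-minus-min range would work too.

```python
from statistics import pstdev

# Hypothetical scores: project -> criterion -> one score per teammate (shared 1-8 scale)
team_scores = {
    "Dark mode": {"impact": [2, 2, 3], "effort": [3, 3, 2]},
    "SSO login": {"impact": [1, 8, 2], "effort": [5, 5, 8]},
}

SPREAD_THRESHOLD = 1.5  # assumption: tune to the scale you use


def needs_discussion(scores, threshold=SPREAD_THRESHOLD):
    """Return (project, criterion, spread) for every criterion whose votes diverge widely."""
    flagged = []
    for project, criteria in scores.items():
        for criterion, votes in criteria.items():
            spread = pstdev(votes)
            if spread > threshold:
                flagged.append((project, criterion, round(spread, 2)))
    return flagged


for item in needs_discussion(team_scores):
    print(item)
```

With these sample scores, only the Impact of SSO login (votes 1, 8, 2) is flagged. Its Effort votes (5, 5, 8) have a spread of about 1.41, just under the threshold.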

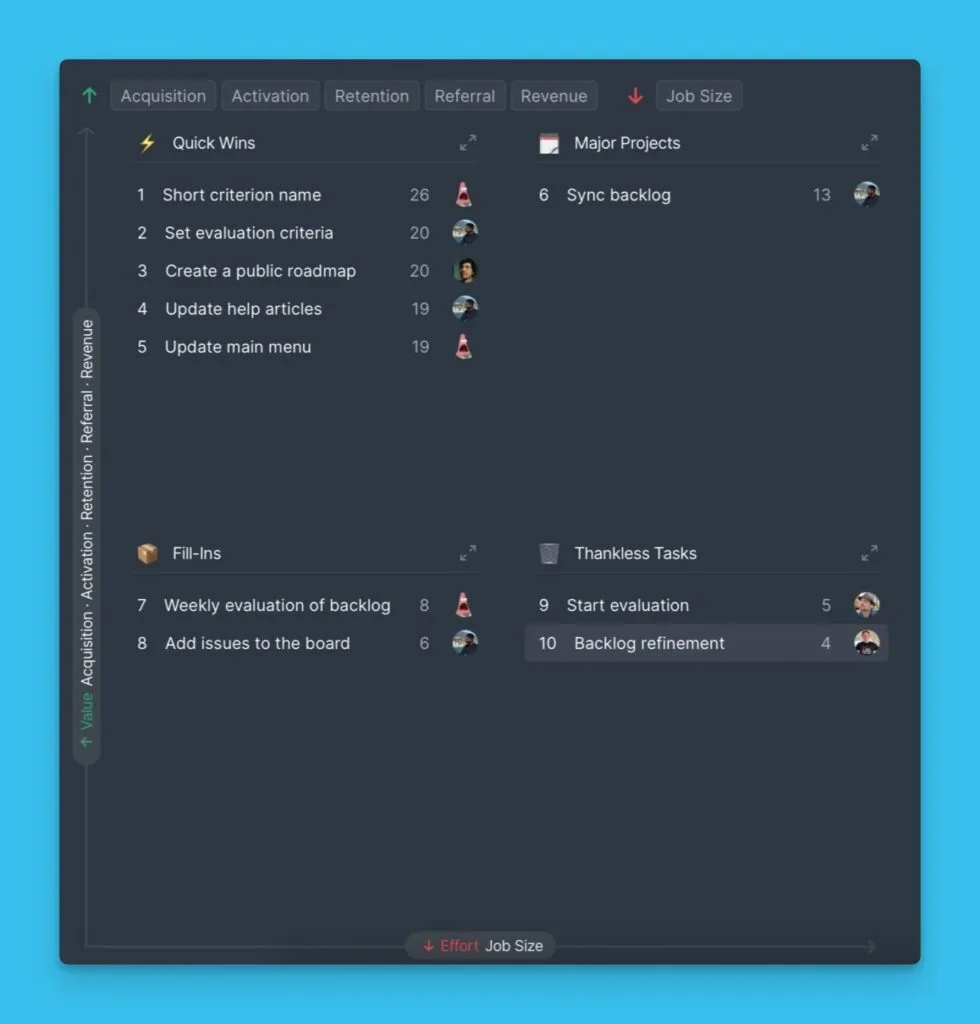

6. Use a matrix to visualize project impact

A 2×2 matrix helps you visualize your product backlog when deciding what to build next. Ranked lists are useful, but where do Quick Wins end and Big Projects begin? Placing projects in four quadrants splits the backlog visually into four categories, based on how fast each project can be done and how much its results matter. This improves sprint planning.

Use both the list and matrix priority views to find the best projects.

Estimating several positive criteria and using matrix filters helps you uncover low-hanging fruit for different goals. This is useful when you manage and grow several metrics at once. For example, you can pick Quick Wins for Activation and Retention while making sure they also benefit Revenue.

Evaluate all the key criteria. Then use filters to find what needs the most focus at a given time, or the tasks that raise a particular metric.
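The sketch below places projects on a 2×2 value/effort matrix. It makes three assumptions:

- Value is one number per project, for example Reach × Impact × Confidence. To mimic a filter, you could use a single metric's score instead.
- The cut lines are the backlog medians.
- The two low-value quadrants get plain descriptive labels, because the text names only Quick Wins and Big Projects.

All names and numbers are hypothetical.

```python
from statistics import median

# Hypothetical backlog: name -> (value, effort)
backlog = {
    "Tooltip copy fix": (400, 1),
    "Billing revamp": (900, 8),
    "Legacy export": (100, 6),
    "Emoji reactions": (150, 2),
}


def quadrants(items):
    """Split items into a 2x2 value/effort matrix using the medians as cut lines."""
    v_cut = median(v for v, _ in items.values())
    e_cut = median(e for _, e in items.values())
    result = {}
    for name, (value, effort) in items.items():
        high_value = value >= v_cut
        low_effort = effort < e_cut
        if high_value and low_effort:
            result[name] = "Quick Win"
        elif high_value:
            result[name] = "Big Project"
        elif low_effort:
            result[name] = "Low value, low effort"
        else:
            result[name] = "Low value, high effort"
    return result


for name, quadrant in quadrants(backlog).items():
    print(f"{quadrant:24}  {name}")
```

Here the medians are 275 for value and 4 for effort. Tooltip copy fix lands in Quick Wins, and Billing revamp lands in Big Projects.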

7. Discuss priorities for informed decision-making

Suppose your team knows its OKRs, has scored upcoming projects, has resolved disagreements, and has the priority list ready. Now it's time to decide what to work on next. That doesn't mean simply taking the top projects and moving on.

During sprint planning, review the priorities again and state which projects are best to ship. Each team member should be able to explain: "I'm implementing project X because the results will affect Y customers and move goal Z." Saying "I'll do this because it's at the top" is not enough.

Understanding what the team is doing, for whom, and why is the key to making the right decisions. That, in turn, is the key to solid growth and development.

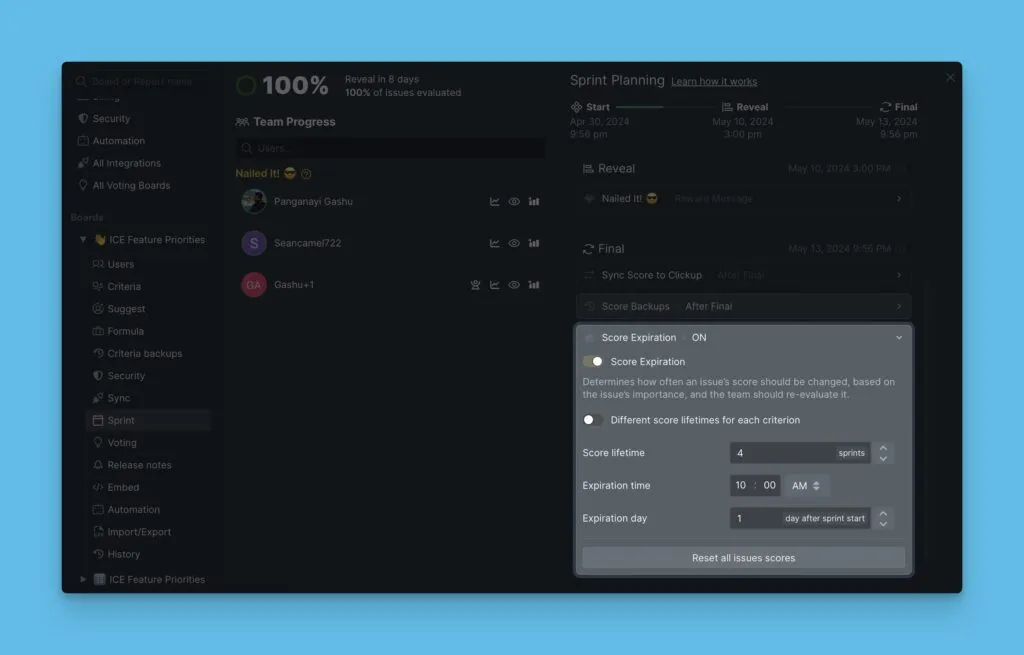

8. Re-evaluate projects to keep priorities current

We once heard about a task that had been sitting in a backlog for nine years. Don't want to repeat that story? Re-evaluate your backlog regularly.

Priorities change quickly, sometimes overnight. A project may not reach the top at first but become valuable a few sprints later. If you don't want to lose great ideas at the bottom of your backlog, re-evaluate them over time as conditions change.

Set up automatic score resets after a few development sprints.

Re-evaluation also helps you find backlog junk. If a project gets low scores cycle after cycle, that's a red flag. Either rethink the idea to make it more valuable, or delete it.
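Both habits can be expressed as simple backlog queries: re-scoring after a few sprints, and spotting ideas that stay at the bottom. This is a sketch with hypothetical names. The parameters are assumptions to tune for your team:

- `max_age` is the number of sprints before a score counts as stale.
- `low_score` is the score below which an evaluation counts as low.
- `cycles` is the number of recent evaluations that must all be low.

```python
from dataclasses import dataclass, field


@dataclass
class BacklogItem:
    name: str
    last_scored_sprint: int
    # One total score per past evaluation, oldest first
    score_history: list[float] = field(default_factory=list)


def stale_items(items: list[BacklogItem], current_sprint: int, max_age: int = 3) -> list[str]:
    """Names of items whose latest score is more than max_age sprints old."""
    return [i.name for i in items if current_sprint - i.last_scored_sprint > max_age]


def red_flags(items: list[BacklogItem], low_score: float, cycles: int = 3) -> list[str]:
    """Names of items that scored below low_score in each of their last `cycles` evaluations."""
    flagged = []
    for item in items:
        recent = item.score_history[-cycles:]
        if len(recent) == cycles and all(s < low_score for s in recent):
            flagged.append(item.name)
    return flagged


# Hypothetical backlog
backlog = [
    BacklogItem("Widget API", last_scored_sprint=10, score_history=[120, 90, 80]),
    BacklogItem("Bulk edit", last_scored_sprint=14, score_history=[40, 35, 30]),
    BacklogItem("Audit log", last_scored_sprint=15, score_history=[300]),
]

print(stale_items(backlog, current_sprint=15))  # candidates for re-scoring
print(red_flags(backlog, low_score=50))         # candidates to rethink or delete
```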

Sign up for Ducalis, connect two-way sync with your task tracker, automate your prioritization process, and build a team habit of evaluation.